Securing Security

When I talk to folks and tell them what I do and who I do it for, often I get a response like “Wow, that’s got to be really interesting! Securing a security company!” I wanted to explore that response and talk about the way that I’ve been thinking about security.

Alright, thanks for having me! It’s true, I’m going to talk about how I, a security person, wrap my head around thinking about security as someone who works at a security company.

So, long story short, what you’re going to want to do is take your computer, pictured here in the middle, and migrate it to the cloud (pictured on your right) and go ahead and apply some HTTPS to the thing.

Well, that about wraps it up. Reach me at the end if there are any questions!

Background

I’m kidding, mostly. I mean, it’s true, you should use HTTPS and the cloud is challenging but has enabled so much. But there’s more to this talk than memes.

So for a little bit of background on me, I’m the Director of Platform Security at Threat Stack. Before I was at Threat Stack I worked in the Secure and Resilient Systems Group at MIT Lincoln Laboratory. My work in that group centered around security in cloud networks. Before I was in that group, I helped manage a network used to build and simulate brand new hardware. Pretty cool!

I mention this background not to brag, but to show that there are many paths to becoming a Security Person.

Also, if you use Twitter, you can follow me for quality security content.

So if there’s one thing I hope you get out of this talk, it’s that security in general means nothing unless you put it in terms of the context you’re working in. In security, this is called having a threat model. A lot of security topics are confusing for outsiders, so I like to think of the threat model as your “context,” which includes the assumptions you’re making.

When I talk about context, I’m talking about the environment you’re working in. I wrote this talk in a donut shop, so donuts are on my mind, right? I imagine the donut shop has a few concerns for its general security needs: handling payment data correctly, protecting against the threat of a thief, and making sure they have a legit payroll company and not, like, Overnight Paychecks, a company I just made up for this talk. Maybe there’s some interest in preventing employee theft? But folks in that world have this context pretty well scoped out, and it falls into some well-known patterns.

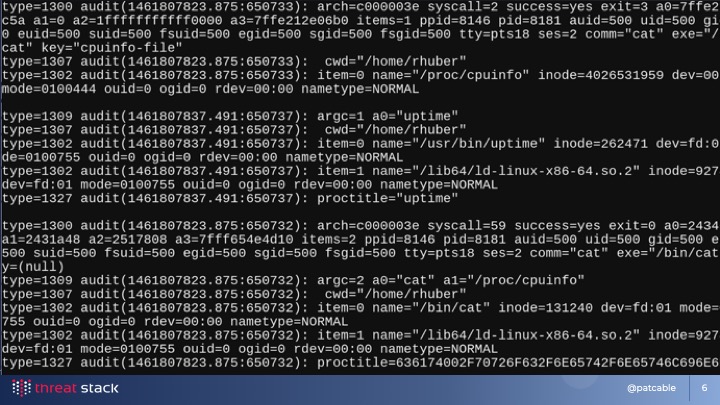

Now, I’m going to briefly give you the context I work in. So there’s this thing called AuditD, and it pulls audit events from Linux’s audit framework. What you’ll notice in this slide is that the output is multi-line, not well formatted, has some fun indentation… the list goes on. So we wrote our own AuditD that formats events as JSON and sends them up to us.
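To give a feel for that reshaping step, here is a minimal Python sketch. It is not Threat Stack's agent; it only assumes raw records shaped like `type=SYSCALL msg=audit(1364481363.243:24287): key=value ...` (the sample values are illustrative) and merges records that share an event timestamp and serial number into one JSON-friendly event. It assumes well-formed records and does not decode hex-encoded fields.

```python
import json
import re
import shlex

# Matches the record header, e.g. "type=SYSCALL msg=audit(1364481363.243:24287): "
HEADER = re.compile(r"^type=(\S+) msg=audit\((\d+\.\d+):(\d+)\):\s*")


def parse_record(line):
    """Split one raw audit record into (serial, timestamp, type, fields)."""
    match = HEADER.match(line)
    if match is None:
        raise ValueError(f"not an audit record: {line!r}")
    record_type, timestamp, serial = match.groups()
    fields = {}
    # shlex strips the double quotes around values such as comm="cat".
    for token in shlex.split(line[match.end():]):
        key, sep, value = token.partition("=")
        if sep:
            fields[key] = value
    return int(serial), float(timestamp), record_type, fields


def group_events(lines):
    """Merge records that share a (timestamp, serial) pair into one event."""
    events = {}  # dicts keep insertion order, so events stay in log order
    for line in lines:
        if not line.strip():
            continue
        serial, timestamp, record_type, fields = parse_record(line)
        event = events.setdefault(
            (timestamp, serial),
            {"serial": serial, "timestamp": timestamp, "records": []},
        )
        event["records"].append({"type": record_type, **fields})
    return list(events.values())


if __name__ == "__main__":
    raw = [
        'type=SYSCALL msg=audit(1364481363.243:24287): arch=c000003e syscall=2 '
        'success=no exit=-13 comm="cat" exe="/usr/bin/cat"',
        'type=CWD msg=audit(1364481363.243:24287): cwd="/home/shadowman"',
    ]
    print(json.dumps(group_events(raw), indent=2))
```

Grouping is the important part: the kernel emits one logical event as several records (SYSCALL, CWD, PATH, and so on), and stitching them back together is what makes the output searchable as a single event.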

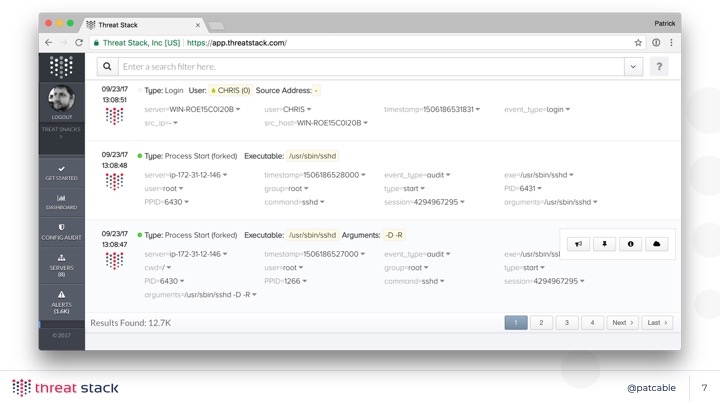

Once we get those events, we alert on them and make them searchable and nice. Isn’t that better? I think so. Find me after the talk if you’d like to know more - but this talk isn’t about what we do.

So given our context, the service we provide, obviously we’re 100% secure, right?

Well, no. And I want to be extremely clear: we have a very, very low margin for error in our context or threat model. The data we collect is sensitive and important. But expectations and words matter, and there just isn’t such a thing as a 100% secure system. Creating one is a thought exercise, and a fun one that folks I’ve worked with in the past are actually working on building. But those efforts go all the way down to how the hardware is built, starting with the processor architectures themselves and building up from there. This is no simple feat. Enterprises with literal aircraft carriers of cash are working on this problem, and whatever they come up with likely won’t be generally usable for a long time, not to mention usable by the ecosystem of things we rely on at our layer of the world.

“100% secure” is a misnomer because we live in a world of constraints. Sometimes I get to create some of the constraints, sometimes the market does, but any system has boundaries and constraints that make it what it is.

Organizations (startups in particular, but I don’t think it’s limited to them) need to be able to push the ball forward. One of the things I get to do in my day job is coordinate third-party code reviews and tests, and I remember being on a readout call where one of the managers said “well, you either get the company right or the code right, but usually if you don’t get the company right you don’t get to get the code right,” which I think was a great way of framing the problem.

Alongside that, my job is to make sure everything meets or exceeds industry best practices for security and safety. What does that look like in a small organization?

So with all that intro out of the way, let’s dive into how we started taking what we already had and making it better.

Authentication and Authorization

One easy place to start is with your authentication system. Now, when I got to Threat Stack we used Chef to provision users onto systems, and every user had access to every host. I think that generally, at a young organization, that makes sense, right? What is a role, when you are three people - everyone does everything at that point! But as you grow, it’s easy to change that.

One logical place for many organizations is the split between the front-end code and the back-end code. Your front end folks may not do a ton of back-end work, so keeping them off those hosts is probably not the worst idea one could have.

This isn’t meant to make any statement about whether they should be there in the first place. If you have someone working on the entire stack, then make a way for that to happen! Just an idea.

But there are other places you can draw boundaries too. Like, probably not every engineer needs access to the edge load balancing proxies, do they? Or, your data ingest pipeline, or your state storage, if you happen to run your own databases. We ended up collapsing together some of these roles over time, but we kept the edge/internal distinction.

What’s nice about doing this work is that it can make bringing new employees on easier. I know that we should be in a world where we don’t log in to hosts at all, but it doesn’t always happen that way. It removes some risk, is all.

How did we accomplish this? Well, we like to joke that we use older, proven technology at work. We find new stuff cool, but we tend to wait to adopt newer technology because it has less history and operational know-how behind it.

So, we got some OpenLDAP set up and put folks into the appropriate access groups. Then we set up Chef to configure pam_access on each box based on the role of the box. Nothing overly complicated or special. It just works.
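The Chef side isn't shown in the talk, but the rules it lays down can be sketched. This Python function renders `/etc/security/access.conf` content for a host from its role, assuming a hypothetical role-to-LDAP-group mapping (`ROLE_GROUPS` and every group name in it are made up). pam_access checks rules in order and the first matching line decides, group names are written in parentheses, and a final `- : ALL : ALL` denies everyone not matched earlier. The PAM stack (for example `/etc/pam.d/sshd`) also needs an `account required pam_access.so` line for the file to take effect. In practice you'd express the same thing as a Chef template.

```python
# Hypothetical role -> LDAP group mapping; these names are illustrative only.
ROLE_GROUPS = {
    "edge": ["sre", "edge-admins"],
    "ingest": ["sre", "backend"],
    "web": ["sre", "backend", "frontend"],
}


def render_access_conf(role, role_groups=ROLE_GROUPS):
    """Render pam_access rules that allow only the groups mapped to this role."""
    if role not in role_groups:
        raise KeyError(f"unknown role: {role}")
    lines = ["# Managed by configuration management; do not edit by hand."]
    # Allow root only on local (non-network) logins, as a break-glass path.
    lines.append("+ : root : LOCAL")
    for group in role_groups[role]:
        # Parentheses tell pam_access this is a group, not a user name.
        lines.append(f"+ : ({group}) : ALL")
    # pam_access stops at the first matching rule, so this denies everyone else.
    lines.append("- : ALL : ALL")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_access_conf("edge"), end="")
```

For an edge host this yields allow lines for `sre` and `edge-admins` followed by the catch-all deny, so an engineer in neither group is refused at login.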

Tooling

The cool thing about LDAP is that it opened the door for a lot of new tools. One of the fun parts about my work is that occasionally I get to build some of those tools!

One of the first things we ran into with the whole idea of limiting access across the infrastructure was: how does on-call work when everyone has differing access to all the things? It seemed like it was possible to glue everything together in a way that would allow us to give folks more access for the on-call time period. We made Deputize, a tool that evaluates the current folks on-call at the time you run it, and adjusts a group in LDAP accordingly. It’s open-source, and available at github.com/threatstack/deputize.
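The heart of a tool like this is a set reconciliation: compare who is on call right now with who is in the LDAP group, then add and remove the difference. Here's a minimal Python sketch of just that step. It is not Deputize's actual code; fetching the schedule and applying the changes to LDAP are left out, and the function name and the `permanent` option are hypothetical.

```python
from typing import Iterable, NamedTuple, Set


class GroupChanges(NamedTuple):
    add: Set[str]
    remove: Set[str]


def plan_oncall_changes(
    on_call: Iterable[str],
    group_members: Iterable[str],
    permanent: Iterable[str] = (),
) -> GroupChanges:
    """Work out which users to add to or remove from the on-call LDAP group.

    permanent: users who stay in the group regardless of the schedule
    (a hypothetical option for illustration).
    """
    wanted = set(on_call) | set(permanent)
    current = set(group_members)
    return GroupChanges(add=wanted - current, remove=current - wanted)


if __name__ == "__main__":
    changes = plan_oncall_changes(
        on_call=["alice", "bob"], group_members=["bob", "carol"]
    )
    print(sorted(changes.add), sorted(changes.remove))  # ['alice'] ['carol']
```

Computing a diff instead of rewriting the group wholesale keeps each LDAP change small and makes it easy to log exactly who gained or lost access; running it on a schedule keeps the group in step with the rotation.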

One other thing we implemented, but that I don’t talk about much here, is using YubiKeys to store the keys used to access our infrastructure. One thing I really liked about having LDAP around was that I could store and maintain control over a lot of things, including SSH public keys. There are existing bash and Python mechanisms for doing this, but the bash one felt a bit risky, and the Python one would require installing an interpreter that we don’t already have on every system. So I wrote one that compiles down to a binary. You can get it on GitHub.

To use this, take a look at the OpenSSH documentation for the AuthorizedKeysCommand option in sshd_config.
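`AuthorizedKeysCommand` (paired with `AuthorizedKeysCommandUser`) tells sshd to run a program, passing the username via the `%u` token, and to read authorized public keys from its standard output; sshd requires the program to be owned by root and not writable by group or others. Since avoiding an interpreter on every host was the point above, treat this Python version as a readable illustration of the logic rather than a deployable replacement. The LDAP lookup is swapped for a hypothetical in-memory `KEY_DIRECTORY`; a real version would query LDAP (commonly an `sshPublicKey` attribute). It only prints bare key lines, so a malformed directory entry can't smuggle in `authorized_keys` options such as `command=`.

```python
import re
import sys

# Hypothetical stand-in for the LDAP lookup.
KEY_DIRECTORY = {
    "alice": [
        "ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIExampleKeyOnly alice@laptop",
        'command="/bin/sh" ssh-rsa AAAAB3Example',  # has options: gets dropped
    ],
}

# Accept only "type base64 [comment]" lines with no leading key options.
KEY_LINE = re.compile(
    r"^(ssh-ed25519|ssh-rsa|ecdsa-sha2-nistp256|ecdsa-sha2-nistp384|"
    r"ecdsa-sha2-nistp521|sk-ssh-ed25519@openssh\.com|"
    r"sk-ecdsa-sha2-nistp256@openssh\.com) [A-Za-z0-9+/]+=*( [^\r\n]*)?$"
)


def authorized_keys(user, directory=KEY_DIRECTORY):
    """Return the well-formed public keys stored for user (possibly none)."""
    return [key for key in directory.get(user, []) if KEY_LINE.match(key)]


if __name__ == "__main__":
    # sshd passes the username as the first argument (AuthorizedKeysCommand ... %u).
    # An unknown or missing user prints nothing, so sshd finds no keys.
    for key in authorized_keys(sys.argv[1] if len(sys.argv) > 1 else ""):
        print(key)
```

Wiring it up would look like `AuthorizedKeysCommand /usr/local/bin/ldap-keys %u` plus `AuthorizedKeysCommandUser nobody` in sshd_config, where the path and the unprivileged user are placeholders.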

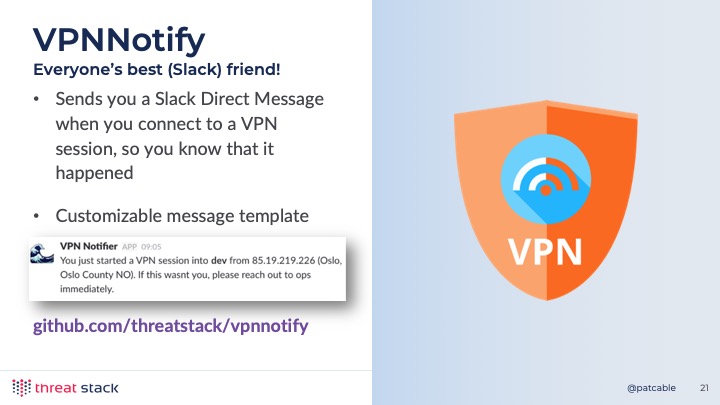

At a previous job, we would receive an email when we connected to the VPN. The idea was that if you got one of these emails and didn’t recognize the connection, you might raise your hand and say “hey, that wasn’t me.” We do that as well, but with a Slack integration that sends you a message. This too is on GitHub.
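Here's a minimal Python sketch of the notification half. The tool described here messages the user directly; for brevity this version instead assumes a Slack incoming webhook, which accepts a JSON body with a `text` field, and a VPN server that can run a hook with the user, source address, and connection time. The webhook URL, the function names, and the message wording are all hypothetical.

```python
import json
import urllib.request
from datetime import datetime, timezone

# Hypothetical webhook URL; a real one comes from your Slack app configuration.
WEBHOOK_URL = "https://hooks.slack.com/services/T000/B000/XXXX"


def build_vpn_alert(user, source_ip, connected_at):
    """Build a Slack message payload describing one VPN connection."""
    when = connected_at.astimezone(timezone.utc).strftime("%Y-%m-%d %H:%M:%S UTC")
    return {
        "text": (
            f"VPN connection for *{user}* from `{source_ip}` at {when}. "
            "If this wasn't you, tell the security team right away."
        )
    }


def send(payload, url=WEBHOOK_URL):
    """POST the payload to the Slack webhook as JSON and return the HTTP status."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status
```

A VPN connect hook would then call something like `send(build_vpn_alert("alice", "203.0.113.7", datetime.now(timezone.utc)))`. Keeping payload construction separate from sending makes the message easy to test without touching the network.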

Secrets

Secrets are everywhere: in your infrastructure and among the people who use it. Managing secrets properly can help you build some interesting things.

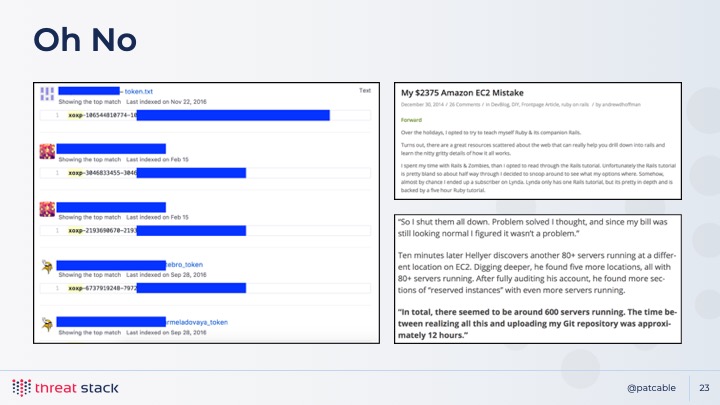

It’s no surprise that accidents happen and people commit their credentials to GitHub; a tale as old as time. Just typing xoxp (the prefix of Slack user tokens) into a GitHub search is enough to discover a lot of secrets in the world. And the whole “accidentally commit AWS secrets to the repo” thing, while there’s more that protects against it these days, can still happen entirely by accident.
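A pre-commit check is one cheap guard against this. Below is a minimal Python sketch that scans files for two well-known token shapes: Slack tokens (prefixes such as `xoxb-` and `xoxp-`) and AWS access key IDs (`AKIA` or `ASIA` followed by 16 uppercase letters or digits). The patterns are deliberately simple and will miss plenty; dedicated scanners such as gitleaks or truffleHog ship much larger rule sets.

```python
import re
import sys

# Simplified patterns; real scanners use many more rules and entropy checks.
PATTERNS = {
    "slack_token": re.compile(r"\bxox[abposr]-[0-9A-Za-z-]{10,}"),
    "aws_access_key_id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
}


def find_secrets(text):
    """Return (line_number, rule_name, match) for each suspected secret."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            for match in pattern.finditer(line):
                hits.append((lineno, name, match.group(0)))
    return hits


def main(paths):
    """Print one line per suspected secret; return 1 if any were found."""
    found = False
    for path in paths:
        with open(path, encoding="utf-8", errors="replace") as handle:
            for lineno, name, _ in find_secrets(handle.read()):
                # Report only the location so the secret isn't echoed into logs.
                print(f"{path}:{lineno}: possible {name}")
                found = True
    return 1 if found else 0


if __name__ == "__main__":
    status = main(sys.argv[1:])
    if status:
        sys.exit(status)
```

Run from a Git pre-commit hook over the staged files, a non-zero exit stops the commit before the secret ever leaves the laptop.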

We selected Vault because we didn’t want to rely on a cloud provider’s secret management system; it felt like it would constrain us too much if we later decided to use another system. The problem with Vault, and many other generic secret management systems, is that they are often so flexible that they can lead to confusion. Some companies (Stripe, Pinterest, Lyft) have created their own secrets management tooling, which tends to be opinionated enough to force you to manage secrets one way.

At the time of this talk we used Goldfish to handle some user-facing secrets. That’s a little less necessary now that Vault ships its UI as part of the open source offering.

Ultimately, we made the tooling work for us.

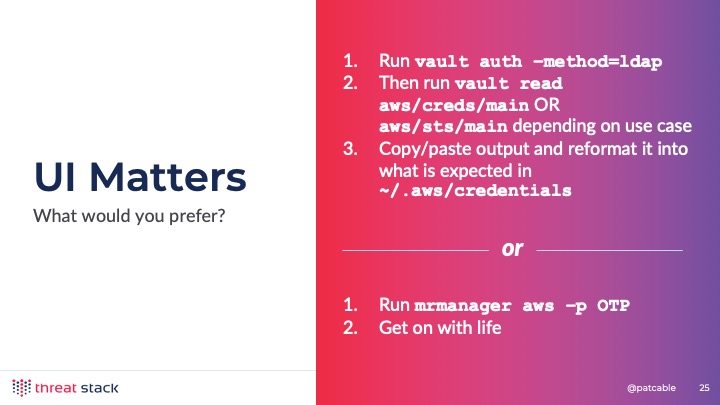

Whatever solution you end up choosing, you’ll need to build tooling around it. Without tooling, your users will have to endure a lot of toil to make use of temporary credentials: log into Vault, then copy and paste the credentials into the right file. This is a sure way to frustrate your users and make them not want to embrace the cool security things you’re looking to implement.
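For AWS, much of that toil is copying three values into `~/.aws/credentials`. Here's a minimal Python sketch of the writing step, assuming some hypothetical earlier step already fetched temporary credentials from Vault (how you authenticate and which path you read depend on your setup). It uses the standard `aws_access_key_id`, `aws_secret_access_key`, and `aws_session_token` keys that the AWS CLI and SDKs read from that file, and keeps the file readable only by its owner. The function name is hypothetical.

```python
import configparser
import os
from pathlib import Path


def write_aws_profile(profile, access_key, secret_key, session_token, path=None):
    """Create or update one profile in an AWS shared credentials file."""
    path = Path(path) if path else Path.home() / ".aws" / "credentials"
    path.parent.mkdir(mode=0o700, parents=True, exist_ok=True)
    # interpolation=None so characters like "%" in values are stored literally.
    config = configparser.ConfigParser(interpolation=None)
    config.read(path)  # a missing file is simply treated as empty
    config[profile] = {
        "aws_access_key_id": access_key,
        "aws_secret_access_key": secret_key,
        "aws_session_token": session_token,
    }
    # Open (creating with 0600 if needed), then tighten an existing file's mode
    # before any secret is written into it.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    os.chmod(path, 0o600)
    with os.fdopen(fd, "w") as handle:
        config.write(handle)
```

A small wrapper command could call this right after fetching credentials, so users run one command instead of copying and pasting, and other profiles in the file are left untouched.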

I have a harder time talking about machine-to-machine secrets (think of your application talking to its database). That’s because there are a lot of different ways you could implement it. You could have your configuration management (CM) tool talk to Vault and write secrets to the “right place.” You could, in an ideal world, integrate the secret management solution directly into the application. The world is your oyster. And as such: choose your own adventure. Or we’ll tell you about our solution, eventually.
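If you go the “CM tool writes the secret to the right place” route, the write itself deserves a little care: it should be atomic, so an application never reads a half-written file, and owner-only, so other local users can't read it. A minimal Python sketch, assuming the secret value was already fetched during the CM run; the function name and paths are hypothetical.

```python
import os
import tempfile


def write_secret_atomically(path, value):
    """Write value to path with 0600 permissions, replacing any old file atomically."""
    directory = os.path.dirname(os.path.abspath(path))
    # mkstemp creates the file with mode 0600 in the same directory as path,
    # so the os.replace below stays on one filesystem and is atomic on POSIX.
    fd, tmp_path = tempfile.mkstemp(dir=directory, prefix=".secret-")
    try:
        with os.fdopen(fd, "w") as handle:
            handle.write(value)
            handle.flush()
            os.fsync(handle.fileno())
        os.replace(tmp_path, path)
    except BaseException:
        # Don't leave a stray temp file holding the secret behind.
        os.unlink(tmp_path)
        raise
```

Readers of the file see either the old secret or the new one, never a mix, which matters when a rotation lands while the application is restarting.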

Cryptography

So there’s the popular adage recommending that you don’t write your own cryptography, since it’s easy to mess it up and get it wrong. That’s super true. But one thing I found interesting was that you can also use existing cryptography incorrectly. I mean, sure: that seems obvious. But the ways in which it can go wrong are not obvious.

So when I dialed up 1-800-cryptog and was connected to my local cryptography expert (okay, really, a friend of mine who I worked with previously), she mentioned that if we were storing over 4 billion events with the same RSA key, I could run into issues if all the encrypted records were leaked.

That’s pretty wild, because I figured as long as I was using a modern cipher and flavor that it’d probably be okay. Not so! Talking to folks was the key here. Where do you find cryptographers? That’s a question I don’t have a good answer to. I’m lucky: I have people. If you are looking, many security firms will know people.
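The talk doesn't say which mechanism was behind that figure. For a sense of why counts around 4 billion come up at all, one well-documented case is AES-GCM with random 96-bit nonces: NIST SP 800-38D limits a single key to 2^32 (about 4.3 billion) encryptions in that mode, because a repeated nonce under the same key breaks GCM's confidentiality and authenticity. This Python sketch uses the standard birthday approximation p ≈ 1 − exp(−n(n−1)/2^(b+1)) to estimate the chance of a nonce repeat after n messages with b-bit random nonces. It illustrates that style of limit only and is not a claim about the exact issue discussed here.

```python
import math


def nonce_collision_probability(messages, nonce_bits=96):
    """Approximate chance that at least two random nonces repeat (birthday bound)."""
    space = 2 ** nonce_bits
    exponent = messages * (messages - 1) / (2 * space)
    # expm1 keeps precision when the probability is tiny.
    return -math.expm1(-exponent)


if __name__ == "__main__":
    for n in (2**20, 2**32, 2**40, 2**48):
        print(f"{n:>20,} messages -> p ~ {nonce_collision_probability(n):.3e}")
```

At 2^32 messages the estimate works out to roughly 2^-33, so the limit is conservative by design, but the probability grows with the square of the message count, which is why a fixed cap per key exists at all.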

Incident Response

Incident response is a huge part of the job. If you don’t have a plan, things will spiral out of control.

Ultimately, we know that as humans we’re going to mess this whole thing up. Giving your people the psychological safety to feel comfortable reporting things that concern them is important. Without it, people will underreport, and you’ll find yourself surprised by really difficult situations to clean up.

Compliance

Compliance is such a mixed bag. On one hand, it demonstrates a company’s commitment to making something work for them. But compliance doesn’t always result in actual security either. The real trick of any compliance exercise is doing the work to figure out how it can fit into your own business. Of course you can do the minimum to pass the audit, and there’s business value in doing enough but not too much.

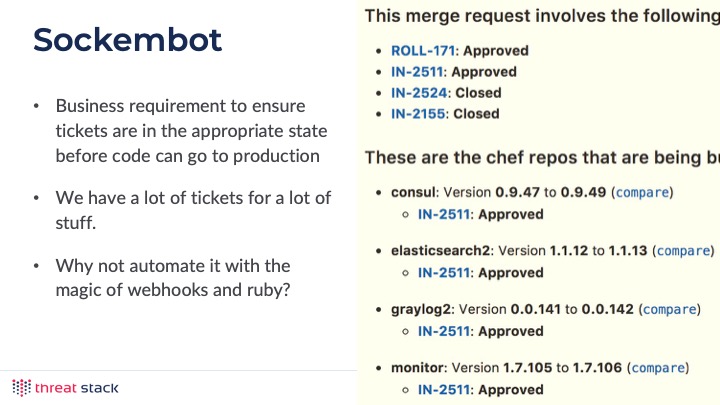

One way we made the process work for us was by building automated tooling that enforces our software development lifecycle. In our case, we had GitLab send a webhook to the
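As a hedged sketch of the general idea (not the tool described here), this Python function inspects a GitLab merge request webhook payload, which has an `object_kind` of `"merge_request"` and details under `object_attributes`, and flags merges into a protected branch that lacked approval. The approval count is passed in by the caller because it would come from a separate lookup, such as GitLab's merge request approvals API; the function name, the branch list, and the one-approval rule are all assumptions.

```python
# Hypothetical policy values; adjust to your own SDLC rules.
PROTECTED_BRANCHES = {"main", "master"}
REQUIRED_APPROVALS = 1


def sdlc_violation(payload, approvals):
    """Describe a policy violation for this webhook event, or return None.

    payload: parsed JSON body of a GitLab merge request webhook.
    approvals: number of approvals, looked up separately (hypothetical).
    """
    if payload.get("object_kind") != "merge_request":
        return None
    attrs = payload.get("object_attributes", {})
    if attrs.get("action") != "merge":
        return None
    if attrs.get("target_branch") not in PROTECTED_BRANCHES:
        return None
    if approvals < REQUIRED_APPROVALS:
        return (
            f"!{attrs.get('iid')} merged into {attrs.get('target_branch')} "
            f"with {approvals} approval(s); {REQUIRED_APPROVALS} required"
        )
    return None
```

A receiver would return quickly to GitLab and send any non-None result to a ticket or chat channel, which leaves an audit trail of exceptions instead of relying on people remembering the process.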

Wrapping up

So a question I get often is: all of this sounds great, but I don’t have enough of anything to make it happen. I get that. I definitely have less time now than I used to, and it’s hard to feel like I have time to do all the things.

The best advice I have is to start small. There are tiny things you can do throughout your infrastructure that add up to significant security wins. Do you enforce 2FA across all your services? How do you handle vendor management? Okay, that might not be so small. But there are things you can do that lead naturally toward becoming more secure.
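The 2FA question is a good candidate for a small automated check. This Python sketch takes a user export and lists active accounts without a second factor. The export format is hypothetical: a list of dicts with `username`, `active`, and `mfa_enabled` fields, standing in for whatever your identity provider or SaaS admin API returns. A missing `mfa_enabled` field is treated as “no 2FA,” so gaps in the data show up in the report rather than hiding.

```python
from typing import Iterable, List, Mapping


def users_missing_2fa(users: Iterable[Mapping[str, object]]) -> List[str]:
    """Return sorted usernames of active accounts that have no second factor."""
    return sorted(
        str(user["username"])
        for user in users
        if user.get("active", True) and not user.get("mfa_enabled", False)
    )
```

Run on a schedule, something like this turns “do you enforce 2FA?” into a short list of names you can act on.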

This hiring market is not going to get easier, and since you need every advantage you can get, part of that advantage is bringing teams together to learn from each other. Embed your Security folks with your Ops folks. Ops folks implement a lot of the infrastructure and will probably raise their hand when something seems off. And if the security folks use existing tooling to deploy their solutions and tools, that’s a win too: it means other folks can help maintain things.

Automation is always your friend. How can you leverage it to reduce security toil? How can you put automated controls on processes in your infrastructure? Can you glue a few services together to make your job a little easier? It might be possible with a little code.

But besides all of this: your context matters most. If you’re building an app that only lets people send each other the word “Yo,” and you only collect an email address and a password (a big if these days), you may have a different threat model or context than I do. And that’s okay! It’s a big reason why I don’t react so wildly to “oh my gosh, that terminal over there runs Windows XP”: I don’t know anything about the network, the infrastructure surrounding it, or the context of that device. None of this is simple. Recognizing that, and doing some deep thinking about security’s role in your world, is important.

And: that’s how we’re thinking about security at a security company. For today, anyways.

I’m @patcable on Twitter so feel free to ask me about this talk, or anything else!